What You Should Do to Find Out About DeepSeek Before You're Left B…

Why is DeepSeek making headlines now? A big reason why people do think it has hit a wall is that the evals we use to measure the outcomes have saturated. Have you met Clio Duo? For instance, Clio Duo is an AI feature designed specifically with the unique needs of legal professionals in mind. Ready to explore AI built for legal professionals? But beyond the financial market shock and frenzy it caused, DeepSeek's story holds valuable lessons, especially for legal professionals. While DeepSeek makes it look as if China has secured a solid foothold in the future of AI, it is premature to say that DeepSeek's success validates China's innovation system as a whole. DeepSeek's ability to sidestep these financial constraints signals a shift in power that could dramatically reshape the AI landscape. But this may also be because we're hitting the limits of our ability to evaluate these models. Is AI hitting a wall?

Ilya Sutskever, co-founder of AI labs Safe Superintelligence (SSI) and OpenAI, told Reuters recently that results from scaling up pre-training - the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures - have plateaued. Remember, dates and numbers are relevant for the Jesuits and the Chinese Illuminati, that's why they released on Christmas 2024 DeepSeek-V3, a new open-source AI language model with 671 billion parameters trained in around 55 days at a cost of only US$5.58 million! Why is it hard to accelerate general CFGs? You can sign up using: Email Address: Enter your valid email address. The research suggests that current medical board structures may be poorly suited to address the widespread harm caused by physician-spread misinformation, and proposes that a patient-centered approach may be insufficient to deal with public health issues. The gaps between the current models and AGI are: 1) they hallucinate, or confabulate, and in any long-enough chain of analysis they lose track of what they are doing. The utility of synthetic data is not that it, and it alone, will help us scale the AGI mountain, but that it will help us move forward to building better and better models.

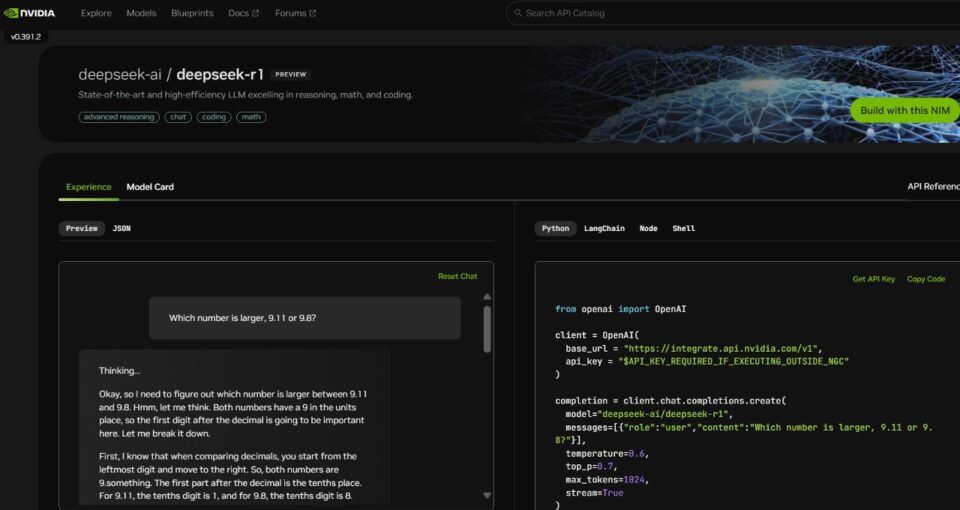

o1 is much better at legal reasoning, for instance. We have just started teaching reasoning, and teaching models to think through questions iteratively at inference time rather than just at training time. We now have multiple GPT-4 class models, some a bit better and some a bit worse, but none that were dramatically better the way GPT-4 was better than GPT-3.5. It also does much better with code reviews, not just creating code. Other non-OpenAI code models at the time sucked compared to DeepSeek-Coder on the tested regime (basic problems, library usage, LeetCode, infilling, small cross-context, math reasoning), and especially compared to its basic instruct fine-tune (FT). What seems likely is that gains from pure scaling of pre-training have stopped, which means that we have managed to incorporate as much information into the models per size as we made them bigger and threw more data at them than we have been able to in the past. If you have enabled two-factor authentication (2FA), enter the code sent to your email or phone. Soon after, research from cloud security firm Wiz uncovered a major vulnerability: DeepSeek had left one of its databases exposed, compromising over a million records, including system logs, user prompt submissions, and API authentication tokens.

The Financial Times reported that it was cheaper than its peers, with a price of 2 RMB for every million output tokens. In 5 out of 8 generations, DeepSeekV3 claims to be ChatGPT (v4), while claiming to be DeepSeekV3 only 3 times. There's also the worry that we've run out of data. I'd encourage readers to give the paper a skim - and don't worry about the references to Deleuze or Freud and so on, you don't really need them to 'get' the message. This is by no means the only way we know how to make models bigger or better. The CodeUpdateArena benchmark represents an important step forward in assessing the capabilities of LLMs in the code generation domain, and the insights from this research can help drive the development of more robust and adaptable models that can keep pace with the rapidly evolving software landscape. This qualitative leap in the capabilities of DeepSeek R1 LLMs demonstrates their proficiency across a wide array of applications.
